Manthan Patel | Lead Gen Man

AI and Lead Gen Talk

👽 | AI Agents and Automation

🚀 | Lead Generation

⚡️ | Everything n8n and AI Agents👇🏻

Recent Posts

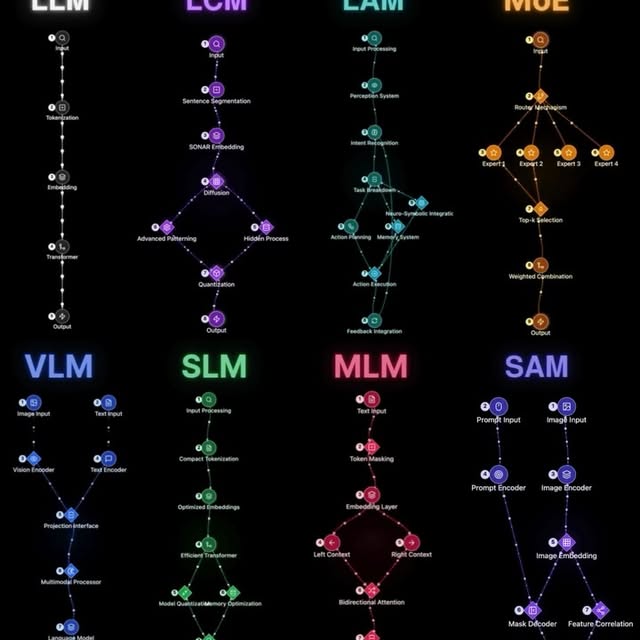

LLMs are AI models, but not all AI models are LLMs.

Building on traditional approaches, these eight specialized models advance AI’s ability to understand, reason, and generate across different domains and modalities.

Here are the architectures of these 8 state-of-the-art models:

1️⃣ LLMs (Large Language Models)
These foundational models process text token by token, enabling everything from creative writing to complex reasoning.

2️⃣ LCMs (Large Concept Models)
Meta’s newer approach encodes entire sentences as “concepts” in SONAR embedding space, moving beyond word-level processing.

3️⃣ VLMs (Vision-Language Models)
These multimodal models combine visual and textual understanding to interpret images and generate text about them.

4️⃣ SLMs (Small Language Models)
Compact yet capable models optimized for edge devices with tight energy and latency constraints.

5️⃣ MoE (Mixture of Experts)
These models activate only the relevant expert networks for each input, improving efficiency while maintaining performance.

6️⃣ MLMs (Masked Language Models)
The OG bidirectional models that look at both left and right context to understand meaning in text.

7️⃣ LAMs (Large Action Models)
Emerging models that bridge understanding with action, executing tasks through system-level operations.

8️⃣ SAMs (Segment Anything Models)
Foundation models for universal visual segmentation with pixel-level precision.

Matching the right architecture to the right task is essential. It saves time, boosts productivity, and creates a more natural flow in AI-human interactions.

Over to you: What specialized AI architecture do you think would benefit your work the most?
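To make the Mixture of Experts idea concrete, here is a minimal sketch of top-k routing in plain Python. It is an illustration under loud assumptions: the toy `EXPERTS` and the hard-coded gate scores are hypothetical, and in a real MoE model the scores come from a learned router inside each layer.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k=2):
    # Keep only the k highest-scoring experts and renormalize their weights.
    order = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = order[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, gate_scores, k=2):
    # Only the chosen experts run; the others are skipped entirely.
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_scores, k))

# Hypothetical toy experts standing in for expert networks.
EXPERTS = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]

# Assumed router output for one input: experts 1 and 2 score highest.
output = moe_forward(3, EXPERTS, gate_scores=[0.1, 2.0, 2.0, -1.0], k=2)
```

With four experts and k=2, only half of the expert functions run for this input, which is where the efficiency gain comes from.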

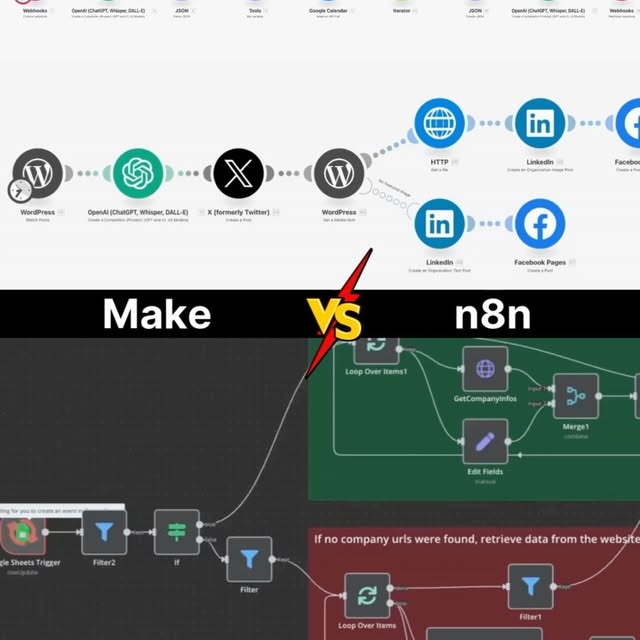

Make vs n8n ↓

Price: 🟩🟩🟩🟩 >> 🟩⬜️⬜️⬜️
Ease of use: 🟩🟩⬜️⬜️ << 🟩🟩🟩🟩
Integrations: 🟩🟩⬜️⬜️ << 🟩🟩🟩🟩
Flexibility: 🟩🟩🟩🟩 >> 🟩🟩⬜️⬜️

Make is great for getting started with automation. But you will be frustrated when things get serious:

- your bill will skyrocket
- you’ll struggle to manage advanced workflows
- you’ll be limited to Make’s native functionality

That’s why more and more organizations prefer n8n today.

Now, n8n vs LangGraph

𝗻𝟴𝗻 (𝗩𝗶𝘀𝘂𝗮𝗹 𝗪𝗼𝗿𝗸𝗳𝗹𝗼𝘄 𝗔𝘂𝘁𝗼𝗺𝗮𝘁𝗶𝗼𝗻)

- Creates visual connections between AI agents and business tools
- Flow: Trigger → AI Agent → Tools/APIs → Action
- Solves integration complexity and enables rapid deployment
- Think of it as the visual orchestrator connecting AI to your entire tech stack

𝗟𝗮𝗻𝗴𝗚𝗿𝗮𝗽𝗵 (𝗚𝗿𝗮𝗽𝗵-𝗯𝗮𝘀𝗲𝗱 𝗔𝗴𝗲𝗻𝘁 𝗢𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮𝘁𝗶𝗼𝗻) by LangChain

- Enables stateful, cyclical agent workflows with precise control
- Flow: State → Agents → Conditional Logic → State (cycles)
- Solves complex reasoning and multi-step agent coordination
- Think of it as the brain that manages sophisticated agent decision-making

Over to you: What are you using right now?
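The "State → Agents → Conditional Logic → State (cycles)" flow can be sketched in a few lines of plain Python. This is not LangGraph's API, just an illustration of the pattern; the `draft` node, the `review` router, and the quality numbers are all hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, int]

def draft(state: State) -> State:
    # Hypothetical agent node: each pass raises a made-up quality score.
    return {**state, "quality": state["quality"] + 40, "passes": state["passes"] + 1}

def review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the result is good enough
    # or the pass budget is spent.
    if state["quality"] >= 100 or state["passes"] >= 5:
        return "end"
    return "draft"

def run_graph(state: State, nodes: Dict[str, Callable[[State], State]],
              router: Callable[[State], str], start: str = "draft") -> State:
    # Walk the graph: run a node, then let the router pick the next one.
    current = start
    while current != "end":
        state = nodes[current](state)
        current = router(state)
    return state

final_state = run_graph({"quality": 0, "passes": 0}, {"draft": draft}, review)
```

The cycle is the point: a linear Trigger → Action flow runs each step once, while here the same node can run several times until the condition on the shared state is met.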

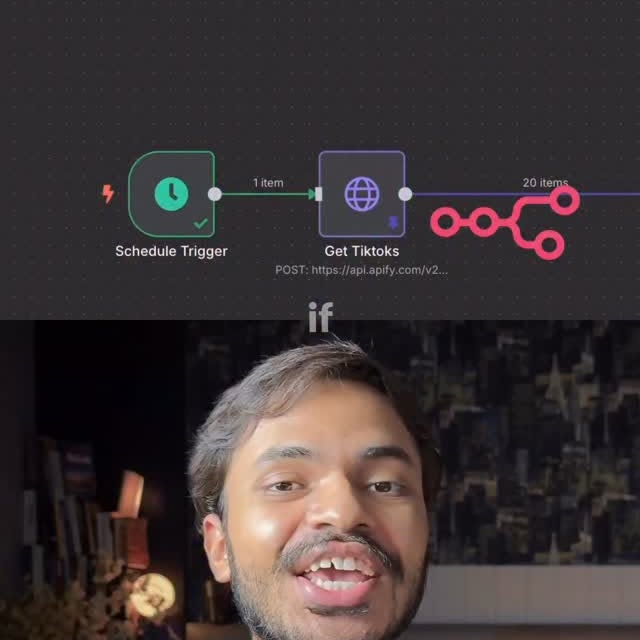

Just starting with n8n? Here are 3 essential nodes you’ll want to learn right away:

1️⃣ Set Node – Think of this as your data editor. You can add, modify, or rename fields in your workflow output. Super helpful when you need to clean up data or create new variables on the fly.

2️⃣ Filter Node – This is your logic gate. It lets only the data that meets specific conditions move forward. Perfect for narrowing down results and building smarter, cleaner automations.

3️⃣ HTTP Request Node – This one connects n8n to the rest of the internet. Whether you’re integrating with an API, a service like Apify, or a tool that doesn’t have a native n8n node, this node gets the job done.

Understanding just these three gives you the foundation to build way more powerful and flexible workflows, even as a beginner.

🚀 Learn these well, and you’re already ahead of the curve.
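If it helps to think in code, here is a rough Python analogy for what the three nodes do to the items flowing through a workflow. It is not n8n code: the helper names, the sample leads, and the `https://example.com/api/leads` URL are made up for illustration, and the request is only built, never sent.

```python
import json
import urllib.request

def set_fields(item, **fields):
    # Like the Set node: add or overwrite fields on one item.
    return {**item, **fields}

def filter_items(items, predicate):
    # Like the Filter node: only items that meet the condition move forward.
    return [item for item in items if predicate(item)]

def build_request(url, payload):
    # Like the HTTP Request node: prepare a JSON POST to any API.
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Hypothetical sample data.
leads = [{"name": "Ada", "score": 82}, {"name": "Bob", "score": 35}]
tagged = [set_fields(lead, source="webform") for lead in leads]
qualified = filter_items(tagged, lambda lead: lead["score"] >= 50)
request = build_request("https://example.com/api/leads", {"leads": qualified})
# Passing `request` to urllib.request.urlopen would actually send it.
```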

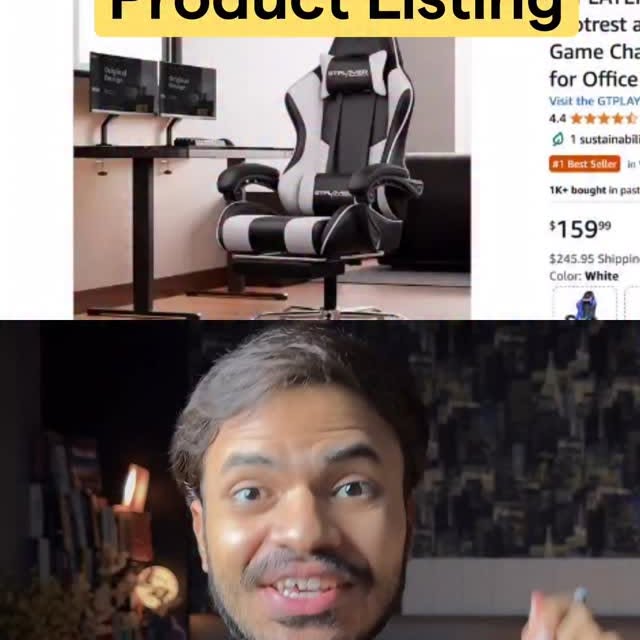

👇 Selling on Amazon? You need to check this out: 24.online

💡 It’s an AI-powered tool that analyzes your entire Amazon listing (images, title, keywords, description) and gives you detailed feedback on how to improve it.

✅ Upload your product photo or paste your listing URL
✅ Instantly get a score with strengths, weaknesses, and suggestions
✅ AI checks everything, even image quality and keyword relevance
✅ Designed for Amazon sellers, marketplaces, and small businesses

Perfect for Amazon sellers, including those looking to boost existing listings, this tool saves you from hiring a full team and helps you sell more, faster.

🔗 Try it now at 24.online and see how your listing improves with AI.

I couldn’t find qualified leads fast enough. (Here’s how I fixed it in 10 minutes using Apollo’s AI)

I didn’t just want another contact database. Or generic email templates that prospects would ignore. I wanted a system that actually works. And now it finds my ideal customers before my competitors do.

1. I logged into @useapollo.io
2. Used AI Power-ups to research my best customers
3. Let Lookalikes find 500 similar companies in seconds
4. Had AI Email Writer craft personalized messages based on real signals

Done. My own AI-powered sales engine. Finds the right people. Writes emails they actually read. Books meetings while I sleep.

No more manual research. No more generic outreach. Just smarter prospecting. This is literally what sales should feel like.

See for yourself: www.apollo.io (seriously, the free plan alone will blow your mind)

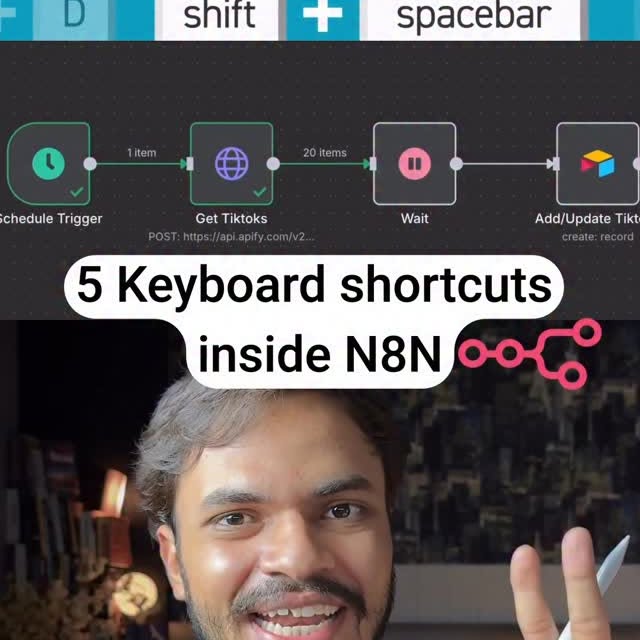

🎟️ Comment “n8n” and I’ll send you the full keyboard shortcuts list straight to your DMs!

💡 Save time with these 5 quick n8n keyboard shortcuts:

1️⃣ Tab – Open or close the node panel
2️⃣ P – Pin or unpin node data (super useful for debugging)
3️⃣ D – Activate or deactivate a node instantly
4️⃣ Fn + F2 – Rename any node quickly
5️⃣ Ctrl + Enter / Cmd + Enter – Run your workflow without touching the mouse

Small shortcuts, big time savers. Perfect if you’re building often in n8n.

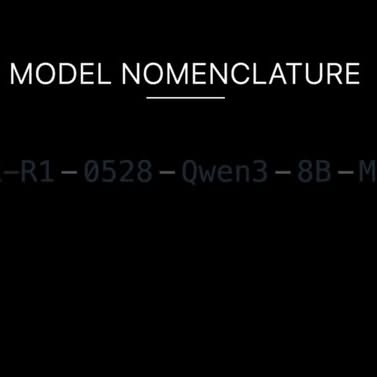

How to Read AI Model Names (Explained Like a 5-Year-Old)

“Open source AI is the path forward” - Mark Zuckerberg.

But first, you need to know how to decode names like “DeepSeek-R1-0528-Qwen3-8B-MLX-4bit”, because each part tells you what hardware you need and what performance to expect.

Here’s what each part actually means:

DeepSeek-R1 = The Company & Model Type
DeepSeek is the company name. R1 is their reasoning model, trained with reinforcement learning. It’s their flagship AI that competes with OpenAI o1.

0528 = Version Date
This indicates the May 28, 2025 release. Newer versions generally perform better. DeepSeek reported that this update lifted the full R1 model from 70% to 87.5% accuracy on the AIME 2025 math test.

Qwen3 = The Engine
The base model from Alibaba Cloud that this model builds on. Here, R1’s reasoning was distilled into a Qwen3 base. Qwen supports over 100 languages and provides the foundation for the reasoning capabilities.

8B = Size (8 Billion)
The model has 8 billion parameters. This size runs efficiently on consumer hardware while maintaining strong performance. The weights need about 16GB of memory at 16-bit precision (2 bytes per parameter).

MLX = Special Optimization
Apple’s machine learning framework, optimized for Apple silicon (M1/M2/M3/M4 chips). It enables fast local inference using the unified memory architecture, without CPU-GPU data transfers.

4bit = Compressed Version
Quantization to 4-bit precision shrinks the weights from about 16GB to approximately 4GB. It keeps most capabilities while drastically reducing memory requirements.

Why This Matters:
Understanding model names helps you pick the right AI for your needs. This specific combo means you can run advanced AI on a regular MacBook without cloud subscriptions or expensive hardware.

Next time you see a model name, you’ll know exactly what you’re looking at. Save this for reference when choosing AI models.

Over to you: What model names have confused you lately?
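The size math is easy to check yourself. Below is a small sketch that pulls the parameter count and bit width out of a model name and estimates the memory for the weights alone. Treat it as a rough rule of thumb: the parsing rules are my own assumption (naming is not standardized), unquantized models are assumed to be 16-bit, and real usage adds overhead for context and activations.

```python
import re

def parse_model_name(name):
    # Parameter count, e.g. "8B" or "1.5B" as its own dash-separated part.
    size = re.search(r"(?<![A-Za-z0-9])(\d+(?:\.\d+)?)B(?![A-Za-z0-9])", name)
    # Quantization, e.g. "4bit" or "8-bit".
    quant = re.search(r"(\d+)-?bit", name, flags=re.IGNORECASE)
    return {
        "params_billion": float(size.group(1)) if size else None,
        # Assumption: no quantization tag means 16-bit weights.
        "bits": int(quant.group(1)) if quant else 16,
    }

def weight_memory_gb(params_billion, bits):
    # parameters x bits per parameter / 8 bits per byte, in GB (1e9 bytes).
    return params_billion * bits / 8

info = parse_model_name("DeepSeek-R1-0528-Qwen3-8B-MLX-4bit")
quantized_gb = weight_memory_gb(info["params_billion"], info["bits"])
half_precision_gb = weight_memory_gb(info["params_billion"], 16)
```

For this name the sketch gives 8 billion parameters at 4 bits, so roughly 4GB of weights, versus roughly 16GB at 16 bits.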

AI Agent Architecture

The diagram below illustrates the core architecture of AI agents.

Step 1: Perception
The agent processes inputs from its environment through multiple channels. It handles language through NLP, visual data through computer vision, and contextual information to build situational awareness. Modern systems incorporate audio processing, sensor data, and state tracking to maintain a complete picture of their surroundings.

Step 2: Reasoning
At its core, the agent uses logical inference systems paired with knowledge bases to understand and interpret information. This combines symbolic reasoning, neural processing, and Bayesian approaches to handle uncertainty. The reasoning engine applies deductive and inductive processes to form conclusions.

Step 3: Planning
Strategic decision-making happens through goal setting, strategy formulation, and path optimization. The agent breaks complex objectives into manageable tasks, creates hierarchical plans, and continuously optimizes to find the most efficient approach.

Step 4: Execution
This layer turns plans into actions through intelligent selection, tool integration, and continuous monitoring. The agent leverages APIs, code execution, web access, and specialized tools to accomplish tasks.

Step 5: Learning
The adaptive intelligence component combines short-term memory for immediate tasks with long-term storage for persistent knowledge. This system incorporates feedback mechanisms, using supervised, unsupervised, and reinforcement learning to improve over time.

Step 6: Interaction
The communication layer handles all external exchanges through interfaces, integration points, and output systems. This spans text, voice, and visual communication channels, with specialized components for human-AI collaboration.

What makes an AI agent different from automations and workflows is the feedback loops between components. When execution results feed into learning systems, which then enhance reasoning capabilities, the agent achieves truly adaptive intelligence that improves with experience.

In your view: Which component has the biggest gap between theory and practice?
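Here is a toy sketch of that feedback loop, where execution results update memory and memory changes the next decision. Everything in it is a simplifying assumption: the `Agent` class, the two fake tools, and the plus-or-minus-one scoring stand in for real planning and learning components.

```python
from collections import defaultdict

class Agent:
    def __init__(self, tools):
        self.tools = tools
        # Long-term memory: a learned score per tool, starting at 0.
        self.scores = defaultdict(float)

    def plan(self):
        # Planning: pick the tool with the best score (ties go to the first tool).
        return max(self.tools, key=lambda name: self.scores[name])

    def step(self, task):
        tool = self.plan()
        success = self.tools[tool](task)                  # Execution
        self.scores[tool] += 1.0 if success else -1.0     # Learning from the result
        return tool, success

# Hypothetical tools: one always fails on this task, one always succeeds.
tools = {
    "web_search": lambda task: False,
    "calculator": lambda task: True,
}

agent = Agent(tools)
history = [agent.step("2 + 2") for _ in range(3)]
```

A fixed workflow would call `web_search` every time. The agent tries it once, records the failure, and switches to `calculator` on the next step.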

I watched someone building automations today.

Tab 1: n8n workflow editor.
Tab 2: Make scenario builder.
Tab 3: Zapier editor.

He set up the same process in each with identical triggers, matching data mapping, and the same error handling steps.

- In n8n, he added custom JavaScript nodes, connected several services, and combined multiple triggers in one workflow.
- In Make, he arranged modules, worked with single triggers, and found workarounds for more advanced logic.
- In Zapier, he stacked together Zaps, used built-in actions, and managed branching with filters and paths.

He ran all three setups, checked the logs, and compared how each handled the tasks.

- n8n managed the advanced steps smoothly, offered more flexibility, and didn’t add extra cost for complexity.
- Make worked well for basic flows but needed extra effort for complex logic.
- Zapier was quick for simple automations, but hit limits with multi-step or custom requirements.

He kept the n8n workflow.

Like a psychopath.

It’s me.

If you’re running automations that handle sensitive data, here’s how I’m implementing human-in-the-loop workflows to add a safety layer.

Here’s the tea ☕️

I’ve been building automated workflows for clients, and when you’re dealing with sensitive operations (payment processing, customer communications, data modifications), you may need that human verification step.

That’s where Velatir comes in. It’s a human-in-the-loop platform that adds approval checkpoints to any automation.

What makes this different from basic approval systems:

→ Customizable rules, timeouts, and escalation paths
→ One integration point, no need to duplicate HITL logic across workflows
→ Full logging and audit trails (exportable, non-proprietary)
→ Compliance-ready workflows out of the box
→ Support for external frameworks if you want to standardize HITL beyond n8n

The setup took about 5 minutes: sign up, get an API key, add it to your n8n workflow. One interface, one source of truth, no matter where your workflows live.

Question for my network: What’s the riskiest automation you’re running without human oversight?
